Mip-Grid: Anti-aliased Grid Representations for Neural Radiance Fields

NeurIPS 2023

Seungtae Nam1, Daniel Rho2, Jong Hwan Ko1,3, Eunbyung Park1,3

1Department of Artificial Intelligence, Sungkyunkwan University

2AI2XL, KT

3Department of Electrical and Computer Engineering, Sungkyunkwan University

Abstract

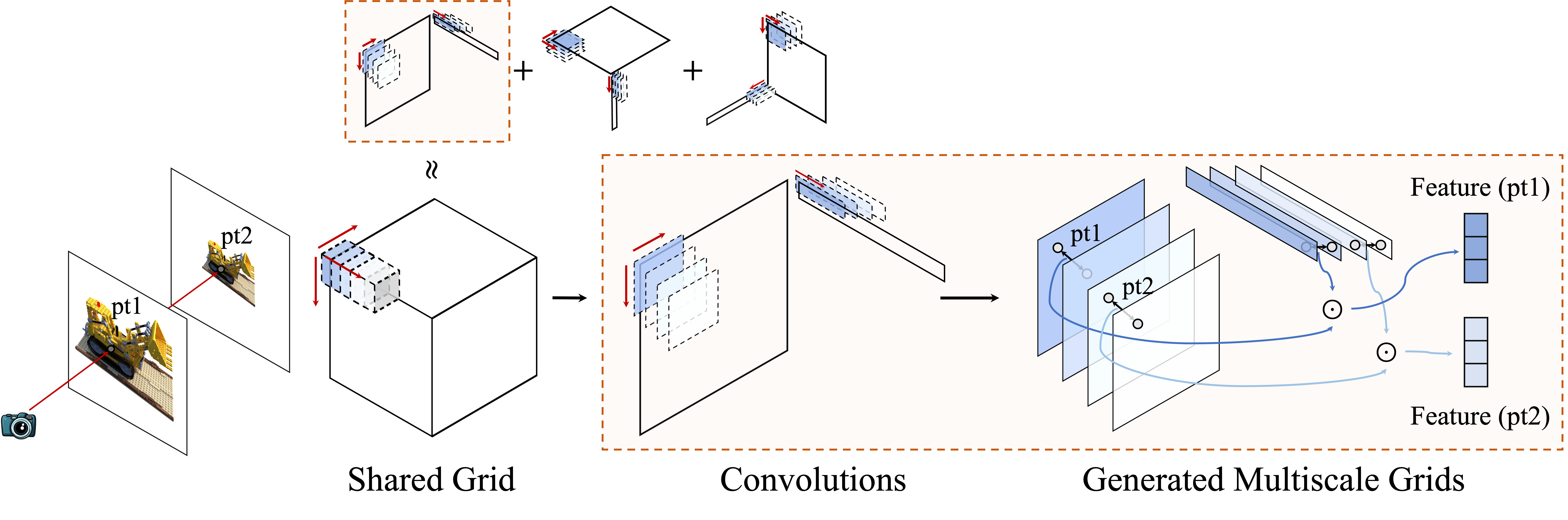

Despite the remarkable achievements of neural radiance fields (NeRF) in representing 3D scenes and generating novel view images, the aliasing issue, which produces “jaggies” or “blurry” images at varying camera distances, remains unresolved in most existing approaches. Mip-NeRF addressed this challenge by rendering conical frustums instead of rays. However, it relies on an MLP architecture to represent the radiance fields, missing out on the fast training speed offered by the latest grid-based methods. In this work, we present mip-Grid, a novel approach that integrates anti-aliasing techniques into grid-based representations for radiance fields, mitigating aliasing artifacts while retaining fast training. The proposed method generates multiple grids by applying simple convolution operations over a single-scale shared grid representation, and uses a scale-aware coordinate to retrieve features at different scales from the generated grids. To test its effectiveness, we integrated the proposed method into two recent representative grid-based methods, TensoRF and K-Planes. Experimental results show that mip-Grid greatly improves the rendering performance of both methods and even outperforms mip-NeRF on multi-scale datasets while training significantly faster.
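The two steps described in the abstract, generating multi-scale grids from one shared grid by convolution and then querying them with a scale-aware coordinate, can be illustrated with a toy 1D sketch. This is not the authors' implementation: the function names, the fixed low-pass kernels (the paper's kernels are learned), the edge padding, and the linear blending across scales are all assumptions made for illustration, and how the scale coordinate `s` is derived from the camera is left out.

```python
# Toy 1D sketch (hypothetical names, not the paper's code):
# 1) build several grids by convolving ONE shared grid with different kernels,
# 2) look up a feature with a scale-aware coordinate (x, s).

def convolve_same(grid, kernel):
    """Filter a 1D grid with an odd-length kernel, replicating edge values.

    The kernels used below are symmetric, so correlation and convolution agree.
    """
    half = len(kernel) // 2
    n = len(grid)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = min(max(i + j - half, 0), n - 1)  # clamp index = edge padding
            acc += w * grid[idx]
        out.append(acc)
    return out


def generate_grids(shared_grid, kernels):
    """One output grid per kernel; all derive from the same shared grid."""
    return [convolve_same(shared_grid, k) for k in kernels]


def lerp(a, b, t):
    return a + (b - a) * t


def sample_1d(grid, x):
    """Linear interpolation at continuous position x (needs len(grid) >= 2)."""
    x = min(max(x, 0.0), len(grid) - 1.0)
    i0 = min(int(x), len(grid) - 2)
    return lerp(grid[i0], grid[i0 + 1], x - i0)


def query(grids, x, s):
    """Scale-aware lookup: interpolate in space, then across the two
    neighbouring scales (needs len(grids) >= 2). Assumed blending scheme."""
    s = min(max(s, 0.0), len(grids) - 1.0)
    k0 = min(int(s), len(grids) - 2)
    f0 = sample_1d(grids[k0], x)
    f1 = sample_1d(grids[k0 + 1], x)
    return lerp(f0, f1, s - k0)


if __name__ == "__main__":
    shared = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]   # high-frequency toy signal
    kernels = [
        [0.0, 1.0, 0.0],       # identity: finest scale
        [0.25, 0.5, 0.25],     # fixed low-pass stand-in for a learned kernel
    ]
    grids = generate_grids(shared, kernels)
    for s in (0.0, 0.5, 1.0):
        print(s, query(grids, 2.0, s))
```

In this sketch a larger `s` selects a more heavily filtered grid, so a query that covers a wider footprint reads a smoother feature instead of the raw high-frequency values. Only the shared grid and the small kernels would need to be stored, since the filtered grids are regenerated from them.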

Generated Multi-scale Grids and Learned Kernels

Qualitative Comparison on the Lego scene

Demo Video (please see in full-screen)

Bibtex
